
According to Merriam-Webster,

Theresa "Tess" Lawrie, MBBCh, PhD, made major waves in the medical science community twice this year. A few weeks ago, the director of the Evidence-based Medicine Consultancy group in Bath, UK, urged colleagues to recognize the COVID-19 vaccination program as "unsafe for use in humans" based on a summary of data from the UK's Yellow Card adverse events reporting system (similar to the U.S. VAERS database). But that's a story for another article. The second came in the form of a meta-analysis that concludes that there is now enough evidence to declare with “moderate certainty” (edit July 3, 2021 from “high certainty”) that ivermectin effectively prevents and treats COVID-19.

In the meta-analysis published by Lawrie and the team she worked with, the nearly 100 studies to date (61 peer reviewed, 60 with control groups, 31 randomized controlled trials) are narrowed down to the 15 that fit the group's inclusion-exclusion criteria and quality grading. This work builds on the already circulating meta-analysis published by Kory et al. But given that Lawrie consults for the World Health Organization, her results are harder for institutions to ignore.

The Lawrie study presents an immediate challenge to mass vaccination policies, not to mention the bottom lines of pharmaceutical giants that are raking in many billions of dollars in profits just to run a large-scale human trial under an Emergency Use Authorization (EUA) policy that can only be in place so long as there is no approved treatment. Hers is not the first meta-analysis reporting ivermectin efficacy, but it's the one from a source with credentials that authorities claim are important.

A meta-analysis is considered to be the highest level of scientific evidence---at least when playing by "the rules". This means that neither the WHO nor the FDA would have a credible leg to stand on while not approving or promoting the extremely inexpensive and off-patent ivermectin to protect against and treat COVID-19. Enter Dr. Yuani Roman et al., with a meta-analysis that comes to the opposite conclusion from Lawrie's. Official Reality script writers really know when to ratchet the drama up a notch, am I right?

Dr. Roman seems to be married to one of her coauthors, Adrian Hernandez, as best I can tell. The pair both work out of the Health, Outcomes, Policy, and Evidence Synthesis (HOPES) research group at the University of Connecticut---a job that allowed the couple to move to the U.S. from Europe and South America, respectively. The focus at HOPES seems to be on meta-analyses, and other big picture studies.

Throughout the pandemic, Hernandez and Roman have been busy cranking out meta-studies suggesting the ineffectiveness of medicines at treating COVID-19. Among these is a meta-analysis of HCQ that consistently includes trials that used non-inert placebos, trials that used excessive and potentially fatal doses, a trial with a control group in which nearly 40% of the patients were medicated with the drugs being tested, and a lot of late treatment studies that tested hydroxychloroquine in the least effective ways imaginable. The first iteration of that meta-analysis was published just five days after the obviously fraudulent Surgisphere study hit the Lancet. The Surgisphere data accounted for the vast majority of the data points, meaning that first iteration was essentially a re-publication of the Surgisphere paper. The HOPES team certainly seems to have a knack for timing.

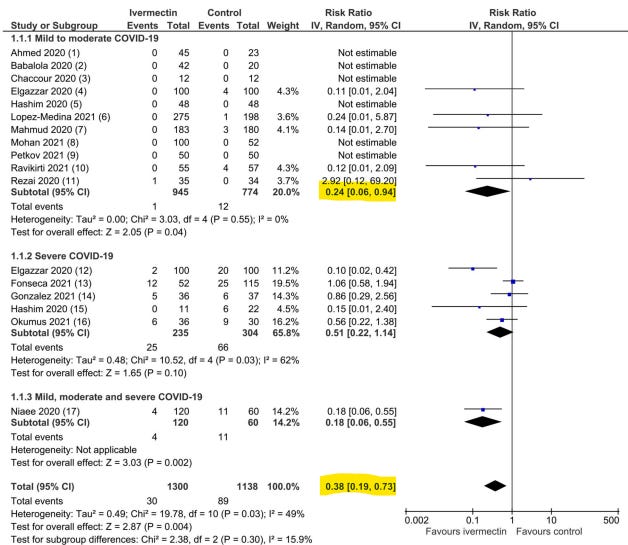

Now, let us examine the HOPES team's new meta-analysis, which may decide between ivermectin's acceptance and rejection by public health experts and vaccine fetishists alike. To begin with, the language in the abstract's conclusion is alarmingly anti-science, and leans toward a policy recommendation---not based on whether patients receiving ivermectin died less often in the grouped research (that happened), but on whether that grouped research, arranged as it was, including many mistakes we will identify, showed a "statistically significant" result:

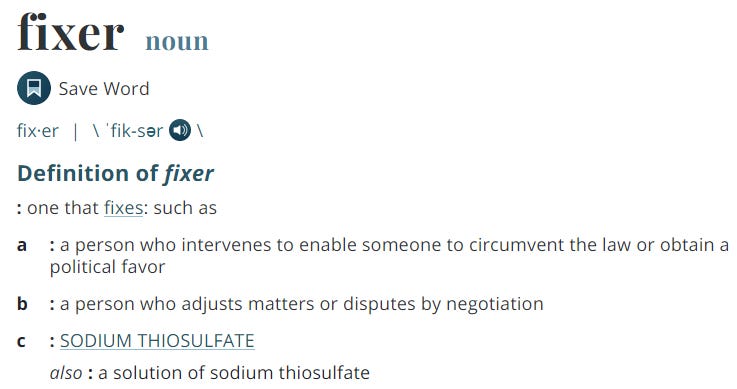

The results section points to an all-cause mortality risk ratio of 0.37 (meaning 63% fewer deaths among those treated with IVM) but with a confidence interval that includes 1, meaning that the result did not reach statistical significance. The correct statement of such a result should not be "failed", but "looks promising, though these results did not [yet] reach statistical significance". That said, I've rarely seen so many important simple data mistakes in a paper, and after simple corrections, and even after ignoring most of the research that might have been included, the results do in fact reach statistical significance.
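To make the point about confidence intervals concrete, here is a minimal sketch of how a risk ratio and its approximate 95% confidence interval are computed from a single two-arm table, using the common log-based method. The counts are hypothetical and chosen only for illustration. They are not taken from the Roman paper or from any study discussed here, and a pooled meta-analytic estimate is computed differently from this single-table case.

```python
import math

def risk_ratio_ci(events_treated, n_treated, events_control, n_control, z=1.96):
    """Risk ratio with an approximate 95% CI (log-based method)."""
    risk_treated = events_treated / n_treated
    risk_control = events_control / n_control
    rr = risk_treated / risk_control
    # Standard error of ln(RR) for a 2x2 table
    se_log_rr = math.sqrt(
        1 / events_treated - 1 / n_treated
        + 1 / events_control - 1 / n_control
    )
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

# Hypothetical counts (assumption): 3 deaths of 100 treated, 8 of 100 controls
rr, lower, upper = risk_ratio_ci(3, 100, 8, 100)
print(f"RR = {rr:.3f} ({1 - rr:.0%} fewer deaths in the treated arm)")
print(f"95% CI = ({lower:.2f}, {upper:.2f})")
print("Interval includes 1:", lower < 1 < upper)
```

With so few events, the interval is wide enough to cross 1 even though the point estimate shows a large reduction. That is the situation described above: a large observed effect that has not reached statistical significance, which is not the same thing as evidence of no effect.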

The introduction of the Roman (HOPES) paper begins with a vague condemnation of misinformation that is entirely disconnected from any of the contents of the paper. Is this a subtle statement that opponents of this paper must necessarily be spreaders of misinformation? Or is it even weirder: a declaration that a paper with worldwide public health implications can be published at will?! In the same paragraph, there is...not lament...just an observation that the use of repurposed drugs has been heavily politicized. Is this a matter of playing the information economics game?

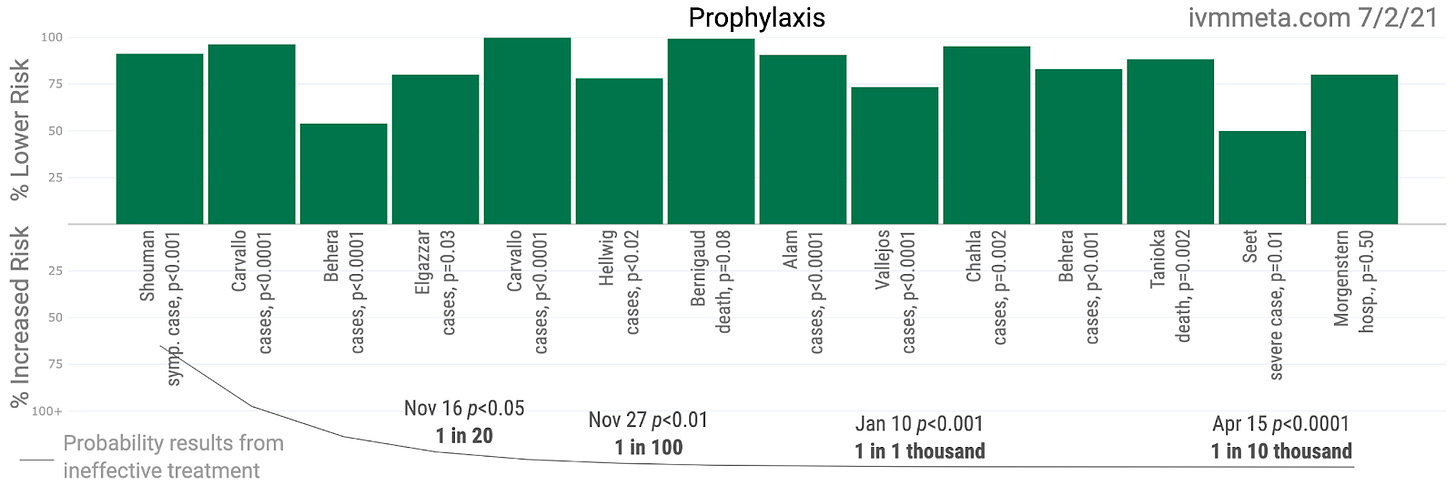

On the next page, the authors discuss the history of meta-analysis of ivermectin's use to treat COVID-19. After mentioning the very early meta-analyses specifically, the authors vaguely reference Lawrie's meta-analysis without naming her (linking to the preprint instead of the final publication) and also the anonymous ivmmeta.com website, both of which (along with Kory's research) currently dominate the recent ivermectin conversation among researchers. This appears to be damning with faint acknowledgement. That's quite bold for a researcher with no previous experience as a first author, and who seems to have not known the evidence well enough to organize the most important numbers into the correct columns. Ask yourself whether anyone (an entire team of scientists, no less) who is well familiar with this body of evidence could make such a mistake that entirely reverses the conclusion without noticing prior to preprint. Whoopsie?

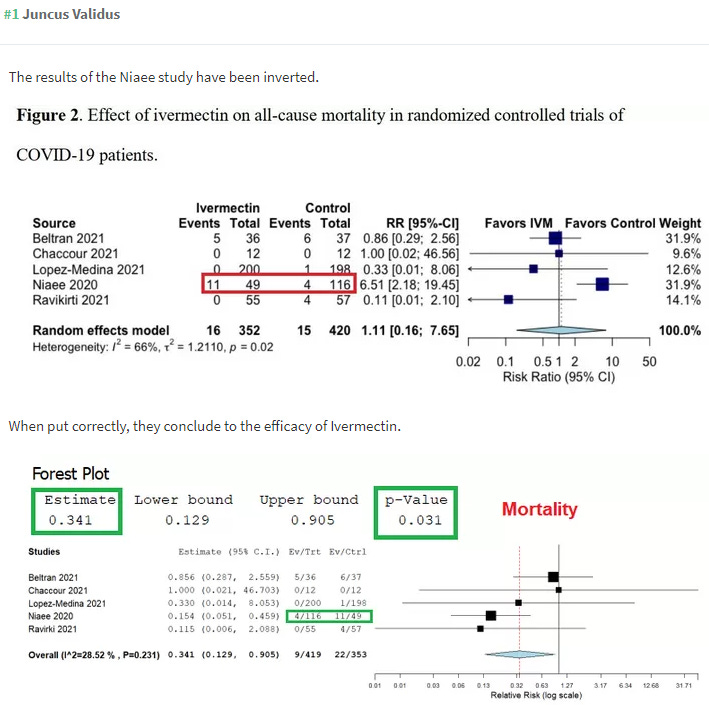

After narrowing down all of the possible studies for inclusion, the HOPES meta-analysis includes only ten out of the several dozen papers published, throwing out most of the larger studies included in Lawrie's meta-analysis, which had already narrowed the pool from Kory's analysis. The HOPES group did not see fit to stratify the results according to time-to-treatment, as I've noted is so crucially important in determining effect sizes based on optimal treatment protocols, which should be the true goal. Instead, the HOPES team assigned extra weighting to the Beltran study on severely ill COVID-19 patients. That weighting alone can make the difference between statistical significance and lack of it (or as the conclusion strangely put it, "did not reduce all-cause mortality"). And as Juncus Validus points out above, the unweighted data does reach statistical significance after the correction. Yet, the HOPES team's final paper declares otherwise...

Roman did, however, include the recent small study by Krolewiecki et al. that declares itself (in the title) to be a "proof-of-concept" trial. To be clear, the write-up is well presented, but that's not what makes a trial high quality evidence [for a given purpose]. With so few participants, the Krolewiecki study did not find much in the way of serious effects such as hospitalization or mortality as defined in usual meta-analyses, but did find a statistically significant difference in viral loads among those patients with substantial ivermectin plasma concentration! Roman and her team neither mention this observed effect, nor correct for it. The result is suggestive of efficacy, but ultimately the HOPES team only uses it as part of a small grouping of studies looking for adverse events. Somehow that focus seems less important than measuring effects of prophylaxis for which there are 14 studies to choose from, with nearly 9,000 patients. The authors gave no critical reason for exclusion, though any conversation about experimental design took place with a knowledge of where those studies stand, so there was no real prospective/retrospective distinction.

Unless I missed it, the Krolewiecki study also did not observe adherence levels to the medication, which is nearly always part of a study with intentions beyond "proof-of-concept". Such observation could settle questions that remain.

While Roman, Hernandez, and crew discarded most all of the larger ivermectin trials, they included the Lopez study out of Colombia. This is another study with a professional-looking write-up that seems weaker as evidence. It may be a great example of a caring researcher having the machinery around him go awry. Among other flaws, the study failed to isolate the effects of ivermectin by allowing participants to seek treatment outside of the trial. The protocols also changed twice throughout the three-week trial. Those changes included the placebo (including its observed physical properties, which might have encouraged more or fewer participants to seek additional treatment at different phases), and also whether or not trial participants from the same household could participate. Changing rates of side effects throughout the trial do seem indicative of a changing treatment regimen. Even worse...during the study, a pharmacist noticed the mislabeling of ivermectin as placebo! Could that mislabeling have happened again, without being caught, leading to a false set of results? The side effects profiles seem to indicate that the mistake was not repeated, but it's very hard to know.

There are multiple reanalyses of the Lopez study that go deeper into the flaws and recompute results. One was written by scientist David Wiseman with the aim of correcting for at least some of the many messy variables. The Lawrie study also includes the Lopez trial, but with more data, the potential flaws in the study affect the outcomes less. Removing it would not change the overall picture of results by much. But the larger problem with the HOPES meta-analysis seems to be a knack for excluding most all of the trials with the best results while finding ways to leverage results from those least favorable to an overall picture of efficacy. Perhaps those many excluded trials have even more problems? In my reading (I'm approaching having read half of them), I would say that there are problems in some of them as there generally are in most all trials, but the arrangement of the research and data in the HOPES meta-analysis strikes me as the most problematic aspect of any of this research.

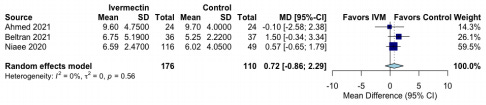

Another study included in both meta-analyses is the Niaee trial. However, ivermectin is not tested against placebo alone in any arm of the Niaee trial, so it fails to meet the authors' stated inclusion-exclusion criteria. Instead, it is tested against another medication (HCQ to be specific). Technically, the Niaee trial includes one arm of 30 patients who received HCQ along with a placebo (the purpose of which is unclear since there was no HCQ+IVM arm), but the HOPES team treats 30 patients receiving HCQ and 30 receiving HCQ+placebo as if all any of them took was placebo. To any degree that HCQ helped patients, that dampens the results with respect to ivermectin. This matters less in the Lawrie study where results reach statistical significance regardless. But it matters a great deal in the HOPES team study where it gets weighted heavily (somehow as 41% even though it represents 22% of the data pool) due to the total exclusion of so much available data. But the worst mistake appears to be that the hospital durations used by Roman and Hernandez seem to be pulled out of thin air with a false suggestion that patients in the control group were discharged earlier.

According to Niaee, patients on ivermectin saw oxygen saturation return to normal much faster, and they were discharged faster. From the conclusion: "Ivermectin as an adjunct reduces the rate of mortality, time of low O2 saturation, and duration of hospitalization in adult COVID-19 patients. The improvement of other clinical parameters shows that ivermectin, with a wide margin of safety, had a high therapeutic effect on COVID-19." The numbers used above in the HOPES meta-analysis seem pulled out of nowhere so far as I can tell. Here is Niaee's table:

Somehow the HOPES team used the data from the Karamat trial to suggest that ivermectin patients were not achieving faster viral clearance than patients not on it. That seems strange given the conclusion of Karamat's team: "In the intervention arm, early viral clearance was observed and no side effects were documented. Therefore ivermectin is a potential addition to the standard of care of treatment in COVID-19 patients."

The numbers Roman and her team use here are the numbers of patients in each arm who achieved viral clearance between days 3 and 7. The actual numbers of patients who achieved viral clearance by day 3 were 17 in the treatment arm vs. just 2 in the control arm. In total, 37 of 41 (90.2%) patients achieved viral clearance by day 7 vs. 20 of 45 in the control arm (44.4%). The RR should be 2.03, not 1.22. Not only that, but the Podder trial results above completely misrepresent the study. These measurements excluded the patients not still in the hospital on day ten, of which there were more in the ivermectin arm than the control arm. These corrections push the RR from 0.97 (even that isn't computed correctly) to 1.23, and that result is in fact statistically significant.
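The Karamat arithmetic is easy to check. The sketch below uses only the counts quoted in the paragraph above, plus one assumption of mine: that the "between days 3 and 7" counts equal the cumulative day-7 counts minus the day-3 counts. Under that assumption, the interval-only counts reproduce the 1.22 figure, while the cumulative counts give 2.03.

```python
def risk_ratio(events_a, n_a, events_b, n_b):
    """Ratio of the event proportion in arm A to that in arm B."""
    return (events_a / n_a) / (events_b / n_b)

# Counts quoted in the text above (Karamat trial)
N_TREATED, N_CONTROL = 41, 45
CLEARED_BY_DAY3 = {"treated": 17, "control": 2}
CLEARED_BY_DAY7 = {"treated": 37, "control": 20}  # cumulative totals

# Assumption: clearance "between days 3 and 7" = day-7 total minus day-3 total
interval = {arm: CLEARED_BY_DAY7[arm] - CLEARED_BY_DAY3[arm]
            for arm in ("treated", "control")}

rr_cumulative = risk_ratio(CLEARED_BY_DAY7["treated"], N_TREATED,
                           CLEARED_BY_DAY7["control"], N_CONTROL)
rr_interval = risk_ratio(interval["treated"], N_TREATED,
                         interval["control"], N_CONTROL)

print(f"Cleared by day 7: {CLEARED_BY_DAY7['treated'] / N_TREATED:.1%} "
      f"vs {CLEARED_BY_DAY7['control'] / N_CONTROL:.1%}")
print(f"RR, cumulative by day 7: {rr_cumulative:.2f}")
print(f"RR, days 3 to 7 only:    {rr_interval:.2f}")
```

Dropping the patients who cleared the virus by day 3 removes 17 from the treatment arm but only 2 from the control arm, which is why the interval-only ratio shrinks so much.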

The inclusion of the Beltran study seems like cherry-picking---particularly given the many exclusions of perfectly reasonable studies to choose from. It was a small trial performed on late stage COVID-19 patients with high incidence of comorbidities. This qualifies as a test under worst conditions, not a study of optimal medical usage. Adding weight to the study beyond its proportional representation seems egregious.
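For readers who want to check how far a study's weight departs from its share of patients, here is a rough sketch of the comparison. It assumes a plain fixed-effect inverse-variance scheme on the log risk ratio, which is one common choice. I have not confirmed which weighting model the HOPES team actually used, and the three studies and all their counts below are hypothetical.

```python
def log_rr_variance(events_t, n_t, events_c, n_c):
    """Approximate variance of ln(RR) for one two-arm study."""
    return 1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c

# Hypothetical studies (assumption):
# (events treated, n treated, events control, n control)
studies = {
    "A": (2, 150, 4, 150),
    "B": (10, 60, 15, 60),
    "C": (1, 90, 3, 90),
}

def weight_and_patient_shares(studies):
    """Return {study: (share of pooled weight, share of patients)}."""
    inv_var = {name: 1 / log_rr_variance(*counts)
               for name, counts in studies.items()}
    patients = {name: counts[1] + counts[3]
                for name, counts in studies.items()}
    total_weight = sum(inv_var.values())
    total_patients = sum(patients.values())
    return {name: (inv_var[name] / total_weight,
                   patients[name] / total_patients)
            for name in studies}

shares = weight_and_patient_shares(studies)
for name, (weight_share, patient_share) in shares.items():
    print(f"Study {name}: weight {weight_share:.0%}, patients {patient_share:.0%}")
```

In this toy example, study B has a fifth of the patients but most of the events, so it takes most of the pooled weight. A random-effects model would add a between-study variance term to every study and pull the weights closer together. Either way, setting the reported weights beside each study's patient share shows how much a single small trial on severely ill patients drives the pooled result.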

I could go on and on. This article is already long, and I have numerous items left on my notepad. But after 20 hours of reviewing the research to write this article, all I can say is that I found nothing at all to redeem the HOPES team or their meta-analysis. I'd like to paint all this in the least negative way possible, but it's hard. Perhaps these are clumsy scientists who do not understand that meta-analysis does not take place without understanding the evidence, and that describing evidence differently from the researchers who published is a sign that you're not evaluating their work. Perhaps this is the tale of a group of researchers whose job seems to be cranking out meta-analyses as fast as possible. That strikes me as a terribly dangerous approach to science, but I understand the way that jobs depend on it. These are not at all people who should handle data for publication, much less with influence in policy-making.

But are these just mistakes?

The Roman/Hernandez meta-analysis comes at a politically contentious moment. Their language and behavior appear political. Their work is error-laden, takes research out of its true context, uses numbers that don't seem to come from the actual studies, chooses papers testing ivermectin under the least favorable circumstances, gives unexplained and inappropriate weights to the small amount of data that stands as outliers to the bigger picture, and still drives a conclusion of "don't use this" from a massive average mortality reduction that did not quite reach statistical significance. At the same time the authors consistently complain about the "low quality of evidence" represented by the studies they do and do not include, nearly all of which I would describe as produced by higher quality scientists who can at least tally numbers correctly. And a medical journal published all this---just in time to push back the Lawrie case. Think on all that for a moment.

Very good analysis of the disgraceful meta-analysis. Well written and researched. How do so-called academic journals get away with publishing this medical misinformation? When this is over they will have to account to the public why they were complicit in withholding a cure for covid.

"These are not at all people who should handle data for publication, much less with influence in policy-making."

Even in the best of times:

Why Most Published Research Findings Are False

John P. A. Ioannidis

Published: August 30, 2005 • https://doi.org/10.1371/journal.pmed.0020124

Abstract

Summary

There is increasing concern that most current published research findings are false. The probability that a research claim is true may depend on study power and bias, the number of other studies on the same question, and, importantly, the ratio of true to no relationships among the relationships probed in each scientific field. In this framework, a research finding is less likely to be true when the studies conducted in a field are smaller; when effect sizes are smaller; when there is a greater number and lesser preselection of tested relationships; where there is greater flexibility in designs, definitions, outcomes, and analytical modes; when there is greater financial and other interest and prejudice; and when more teams are involved in a scientific field in chase of statistical significance. Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias. In this essay, I discuss the implications of these problems for the conduct and interpretation of research.