“Nothing travels faster than the speed of light, with the possible exception of bad news, which obeys its own set of laws.” –Douglas Adams

During the summer of 2011, I took up an invitation to teach at a math camp at Cornell University. It was one of those math camps designed to introduce undergraduate-level mathematics to pre-college students, with an emphasis on problem solving. Since I only had time to teach for one week, I co-taught a course on combinatorics (counting techniques with some probability and statistics) with two other instructors. One of them was Walter Stromquist, Editor in Chief of Mathematics Magazine. Getting to know Walter was the highlight of my summer, and while I was there he asked me to referee some submissions to the journal, a first-time experience for me.

During my third year in college, I jumped ship when Wall Street came calling. I never finished an undergraduate degree in mathematics, much less a graduate degree. Taking a job trading bonds at D.E. Shaw & Company didn't require learning topology or algebraic geometry, so my catalog of mathematical knowledge is narrower than that of a research mathematician. As a result, I couldn't referee one of the papers Walter offered me. But the other two were in my wheelhouse, and I gave one of them a strongly positive recommendation after checking all the work.

The other paper, however, tested me ethically, pitting convenience against fundamental verification. The author analyzed the math of a game show in which contestants spin a big wheel, as on "The Price Is Right," and worked out an algorithm for handling the decisions contestants face along the way. I understood both the problem and the proposed method of solution just fine, but the computations were so ugly that the author hadn't included them, and I didn't want to sit with a notebook for the several hours it might take me to verify them. When it came to the details, the paper simply stated that the calculations had been performed by a program the author had written.

After thinking through what to do with this paper, I spoke to Walter. I explained that I didn't understand how I was supposed to evaluate work that I wasn't shown. Walter didn't comment. He just listened. Seeing that he didn't want to bias my decision by pointing me to anything like a standard procedure, I politely declined to review the paper, feeling that I couldn't quickly resolve my ethical dilemma. I felt fairly certain that the author was entirely honest and had almost surely written a valid program that produced correct answers. Walter accepted my decision, though he never shared his opinion, or his experience of how such cases of computational blindness are usually handled when papers like this are published.

In hindsight, had I felt freer with my time (I was working a full day during what was otherwise my vacation) and been more familiar with how such dilemmas are sorted out in the review process, I might have emailed the author, asked for the code, read it until I understood it, and then tested it both on the problem itself and on a more trivial version of the problem that I could check by hand. Once satisfied, I could have happily recommended publication of the paper.
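To make that kind of cross-check concrete, here is a minimal sketch in Python. It does not reproduce the paper's program, which the paper did not include; it assumes a hypothetical one-player toy model in which a contestant spins once, spins again if the first result is at or below a chosen threshold, and scores zero if the total goes over the limit. The function names are illustrative, and the full-size wheel (20 sections from 5 to 100 in steps of 5) is an assumption about the show. One function computes the expected score directly, the other enumerates every equally likely pair of spins, and the three-value wheel is small enough to check with pencil and paper.

```python
from fractions import Fraction
from itertools import product

def expected_score(wheel, limit, respin_at_most):
    """Exact expected score in a hypothetical one-player toy model.

    Spin once; if the result is <= respin_at_most, spin again and add.
    A total above `limit` busts and scores 0.
    """
    n = len(wheel)
    total = Fraction(0)
    for first in wheel:
        if first > respin_at_most:
            total += Fraction(first, n)  # stay on the first spin
        else:
            # Sum over the second spin, counting busts as 0.
            second_sum = sum(first + s for s in wheel if first + s <= limit)
            total += Fraction(second_sum, n * n)
    return total

def expected_score_brute_force(wheel, limit, respin_at_most):
    """Same quantity, by enumerating every equally likely (first, second) pair."""
    outcomes = []
    for first, second in product(wheel, repeat=2):
        if first > respin_at_most:
            outcomes.append(first)  # the second spin never happens
        else:
            score = first + second
            outcomes.append(score if score <= limit else 0)
    return Fraction(sum(outcomes), len(outcomes))

# Tiny wheel, checkable by hand: respin only after a 1.
# First spin 1 -> second spin gives 2, 3, or a bust (0): average 5/3.
# First spin 2 or 3 -> stay. Expected score = (5/3 + 2 + 3) / 3 = 20/9.
assert expected_score([1, 2, 3], limit=3, respin_at_most=1) == Fraction(20, 9)

# Full-size wheel (assumed 5, 10, ..., 100): the two methods must agree.
wheel = list(range(5, 105, 5))
for threshold in wheel:
    assert expected_score(wheel, 100, threshold) == \
        expected_score_brute_force(wheel, 100, threshold)
```

Exact fractions are used instead of floats so that agreement between the two methods is a strict equality rather than a tolerance judgment.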

By late May, the tide had begun to turn in the hydroxychloroquine (HCQ) debate. Even before HCQ's Trump moment on March 19, 2020, hospitals were stocking up on HCQ and chloroquine (CQ), presumably to use them in treating COVID-19 patients. Numerous articles reported on the Sermo surveys showing solid support among doctors for using the drug to treat COVID-19; the national stockpile of HCQ had swelled thanks to donations from manufacturers; the Association of American Physicians and Surgeons (AAPS) was actively promoting HCQ treatment; and numerous accounts reached the media of doctors successfully using HCQ to treat COVID-19 patients (here, here, here, here, and elsewhere), including high-risk patients in nursing homes.

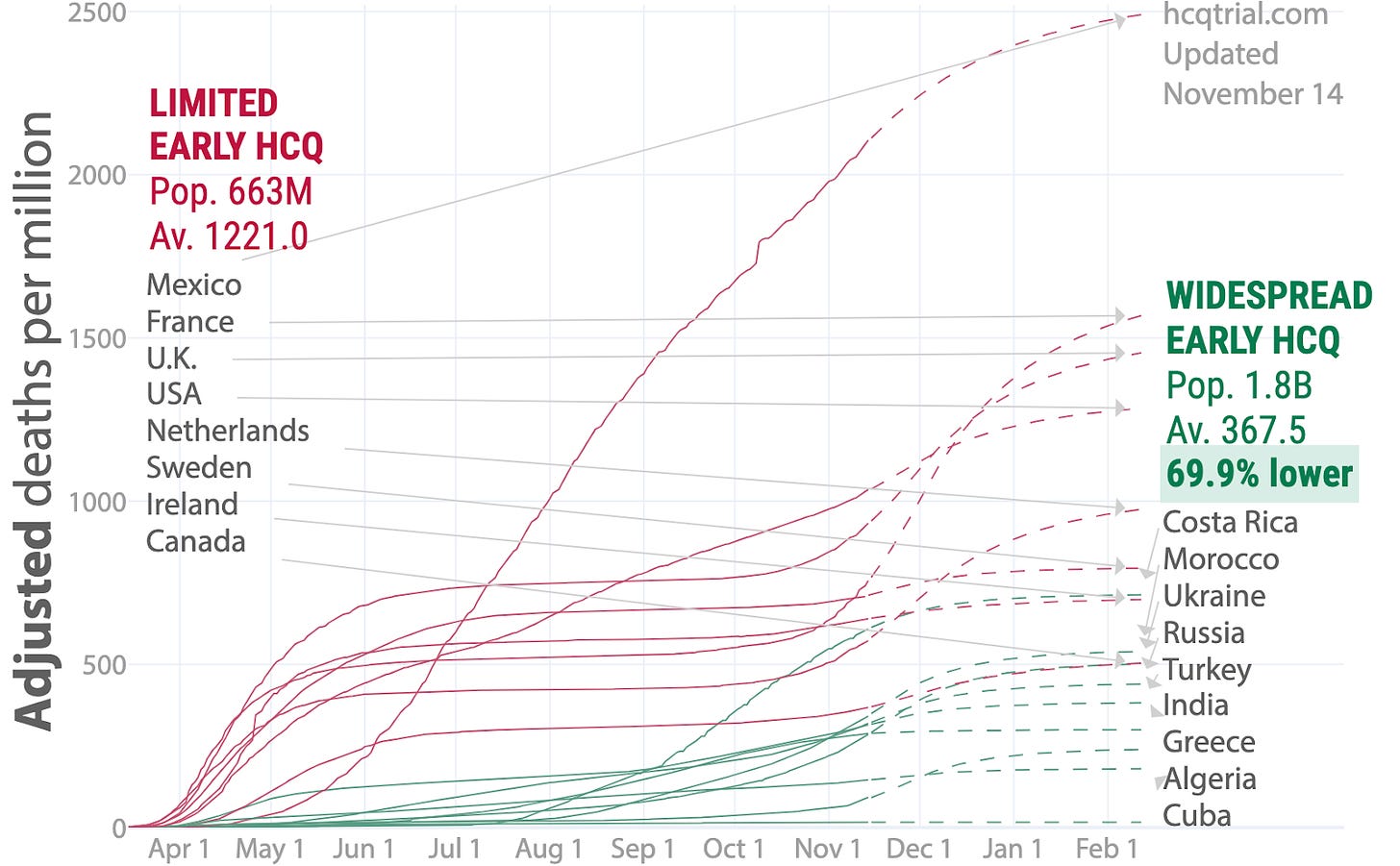

HCQ was more popular as a treatment and prophylaxis abroad than in the U.S. and the nations in its close sphere of influence. Following lobbying by a cadre of Asian and Western nations, India and Pakistan were exporting stocks of HCQ to scores of nations all over the world in a dramatic act of diplomacy. Americans, and people in other nations using little or no HCQ, inevitably began to see reports that many nations using HCQ or CQ were seeing fewer people get sick or die. Still, most of the American, and more broadly Western, media were consistent in their narrative that HCQ was irresponsible, dangerous snake oil.

There were also close to 20 published studies showing anywhere from mild to tremendous benefit for COVID-19 sufferers taking HCQ, and a few more in preprint. Of the handful of studies showing no benefit, almost none showed any significant harm except at experimentally high doses (here and here), which could easily be avoided. On the whole, it appeared that only the U.S., the UK, France, and a small handful of nations in their sphere of influence were dragging their feet, running out of excuses while trying to convince the public that masks would protect them better.

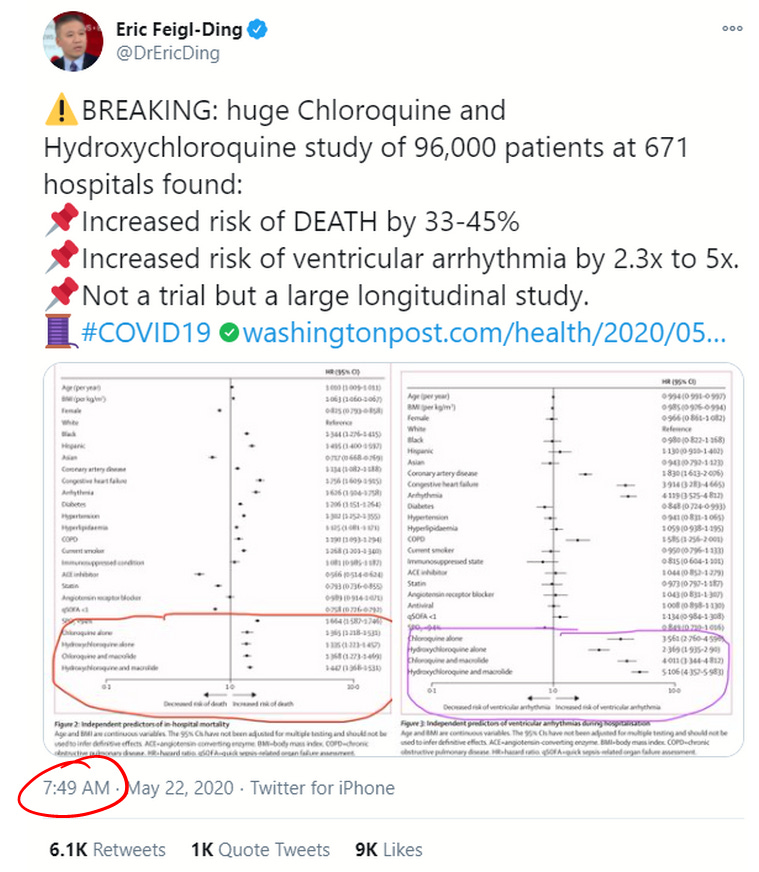

On May 22, all that seemed to change. That day, the prestigious medical journal The Lancet published a study by a self-styled healthcare analytics company called Surgisphere. The paper, entitled "Hydroxychloroquine or chloroquine with or without a macrolide for treatment of COVID-19: a multi-national registry analysis," analyzed around 96,000 COVID-19 patients at 671 hospitals around the world between December 20, 2019, and April 14, 2020, of whom around 15,000 were treated with HCQ or CQ. The study concluded that patients receiving HCQ or CQ died at a substantially higher rate than those not treated with the drugs (33.7% to 44.7%, alone or in combination with a macrolide, rising to 46.8% and 57.5% for HCQ and CQ, respectively, after "statistical adjustments").

The news traveled fast through the mainstream outlets. CNN ran its first report on the Surgisphere paper at 9:13 a.m. Eastern Time on the day of publication, quoting one of its co-authors. Four minutes later, The Washington Post published an article on the Surgisphere results, including quotes from expert cardiologists at prestigious research institutes:

“It’s one thing not to have benefit, but this shows distinct harm,” said Eric Topol, a cardiologist and director of the Scripps Research Translational Institute. “If there was ever hope for this drug, this is the death of it.”

David Maron, director of preventive cardiology at the Stanford University School of Medicine, said “these findings provide absolutely no reason for optimism that these drugs might be useful in the prevention or treatment of covid-19.”

The article goes on to mention that Gilead's drug, remdesivir, "has shown promise in decreasing recovery times," citing just one study.

While the major media outlets each published their stories on the Surgisphere study, what we might call the "science media" also quickly reported the bad news for HCQ. StatNews, Medscape, and ScienceNews reported on the massive retrospective study with varying degrees of emphasis on the Trump association, and without detailed analysis of the results.

On social media, many trumpeted the news as a "Game Over" moment. COVID Twitter megastar Eric Feigl-Ding tweeted the study's conclusions like a billboard (the emphasis on "death" is his):

Feigl-Ding made numerous comments about the data throughout his early-morning, 23-tweet storm, most of it delivered in under an hour, which makes me wonder when he first laid eyes on the study results. In his nineteenth tweet on the subject, Feigl-Ding wrote,

Feigl-Ding added this interesting piece of commentary: "Balanced means no baseline confounding. Akin to what you might see in a trial usually, except it wasn't. Very reassuring." This comment was echoed by the study's lead author, Mandeep Mehra, who concluded in an interview with FranceSoir that the study "represents the equivalent of an actual test of this set of drugs," referencing randomized controlled trials (RCTs) in a way that seems to imply that enough observational data removes the need for an RCT.

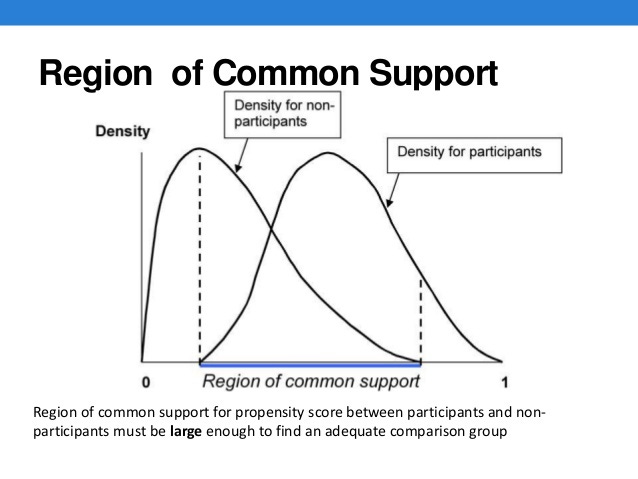

Propensity score matching is a common statistical method for making the treated and untreated patients in an observational study more directly comparable, with the aim of removing confounding bias. Each patient's propensity score is the estimated probability of receiving the treatment given his or her baseline characteristics, and treated patients are compared with untreated patients who have similar scores. This generally brings the results of observational studies closer in line with what clinical trials would show. However, the more the treated population differs from the untreated population, the harder it becomes to apply the method validly. And if the populations differ in kind (all children versus all adults, all men versus all women, all severe cases versus only mild ones), then no untreated patient is comparable to any treated one, and the data has no value as a synthetic controlled study.
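For readers who haven't seen the technique, here is a minimal, hypothetical sketch of propensity score matching in Python, using made-up counts rather than anything from the Surgisphere data. It assumes a single binary confounder (severe versus mild illness) that makes patients both more likely to be treated and more likely to die, while the drug itself does nothing. With only one binary covariate, the propensity score is estimated as the treated share within each stratum, which is what a logistic regression on that covariate would fit when both strata contain treated and untreated patients. Each treated patient is matched to the nearest control or controls by score, with ties averaged, and an assumed caliper (maximum allowed score distance) leaves treated patients unmatched when no comparable control exists. The function names and the caliper value are illustrative.

```python
from fractions import Fraction

# Hypothetical patient record: (severe, treated, died), each a 0/1 flag.

def make_cohort(cells):
    """Expand {(severe, treated): (n_patients, n_deaths)} into patient records."""
    patients = []
    for (severe, treated), (n, deaths) in cells.items():
        patients += [(severe, treated, 1)] * deaths
        patients += [(severe, treated, 0)] * (n - deaths)
    return patients

def propensity_scores(patients):
    """Estimate P(treated | severe) as the treated share in each severity stratum."""
    scores = {}
    for stratum in (0, 1):
        flags = [treated for (severe, treated, _) in patients if severe == stratum]
        if flags:
            scores[stratum] = Fraction(sum(flags), len(flags))
    return scores

def death_rate(group):
    return Fraction(sum(died for (_, _, died) in group), len(group))

def match_att(patients, caliper=Fraction(1, 10)):
    """Nearest-neighbour propensity score matching, with ties averaged.

    Returns (naive_difference, att, n_unmatched, severity_gap): att is the
    average effect on the treated; severity_gap is the mean severity
    difference between treated patients and their matched controls.
    """
    ps = propensity_scores(patients)
    treated = [p for p in patients if p[1] == 1]
    controls = [p for p in patients if p[1] == 0]
    naive = death_rate(treated) - death_rate(controls)

    effects, gaps, unmatched = [], [], 0
    for severe, _, died in treated:
        distances = [abs(ps[c[0]] - ps[severe]) for c in controls]
        best = min(distances)
        if best > caliper:
            unmatched += 1  # no comparable control: outside common support
            continue
        matches = [c for c, d in zip(controls, distances) if d == best]
        effects.append(died - death_rate(matches))
        gaps.append(severe - Fraction(sum(c[0] for c in matches), len(matches)))

    att = sum(effects) / len(effects) if effects else None
    severity_gap = sum(gaps) / len(gaps) if gaps else None
    return naive, att, unmatched, severity_gap

# Overlapping cohort: severity drives both treatment and death.
overlap = {(0, 0): (80, 8), (0, 1): (20, 2), (1, 0): (20, 8), (1, 1): (80, 32)}
print(match_att(make_cohort(overlap)))   # naive 9/50, att 0, 0 unmatched, gap 0

# Populations differing in kind: all treated are severe, all untreated mild.
disjoint = {(0, 0): (100, 10), (1, 1): (100, 40)}
print(match_att(make_cohort(disjoint)))  # naive 3/10, att None, 100 unmatched
```

In the first cohort, the naive comparison makes the inert drug look harmful (34% versus 16% mortality), while matching recovers an effect of zero and balances severity between treated patients and their matches. In the second, no treated patient has a comparable control, so matching has nothing to say at all.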

Feigl-Ding's observation and Mehra's claim were that, over this very large set of data, the baseline statistics for the patients treated and not treated with HCQ/CQ were so similar that the Surgisphere study stood out as the ideal case for declaring a single massive observational study all but equal in value to a massive clinical trial. Were the research community to accept such a claim, it would certainly end all hope for the HCQ Hypothesis.

In the days after the publication of the Surgisphere paper, the French Minister of Health, Olivier Véran, hastily banned the use of HCQ for treatment outside of clinical trials, then quickly suspended those clinical trials as well. The WHO suspended its own clinical trials of HCQ, citing safety fears, then began to send strongly worded communications to nations using the drug, urging them to stop using it to treat COVID-19 patients immediately. Trials testing HCQ efficacy were canceled in several nations. Some nations stopped using HCQ to treat patients while others did not. Dr. Fauci stated definitively that HCQ had proved not to be an effective treatment, adding, "The scientific data is really quite evident now about the lack of efficacy," though he stopped short of recommending a ban on HCQ's use in treatment.

It was in these very moments in late May that public health officials lost all credibility, a credibility that cannot be restored without major changes. Even though most of the evidence pointed to HCQ likely being effective, they made their call on the strength of data supposedly tucked away in a database that nobody had verified, declared it definitive, and then swiftly pushed for policy changes around the world. But the terrifying reality is that from the very start, the entire study looked, prima facie, like a hoax in so many ways that no one competent in statistics and experienced with medical data should have accepted the results without serious questioning. Not anyone acting in good faith, anyhow. The details of why will be explained in the second article in this story.
