"Truth will ultimately prevail where there is pains to bring it to light." -George Washington

See How to Rig Research: Surgisphere Part I.

A few hours after The Lancet published the Surgisphere paper online, I began my first brief look through the details. Stunned, I got up and took a walk outside to shake off my shock.

Just over two months earlier, I started following the hydroxychloroquine (HCQ) story upon witnessing hydroxychloroquine's Trump moment. It astonished me how politically the media seemed to approach this matter of medicine---and during a time when media of all sorts were rotating through pictures of people supposedly dying in the streets and dragging the body of anyone who died of COVID-19 through the social media gutters. How did the media, not to mention the army of better-educated-than-thou Never Trumpers, manage to ascertain in a matter of hours or days, despite a suspicious absence of prior discussion or knowledge of HCQ, an informed understanding that HCQ was "snake oil"---so firmly that anyone who thought there was a chance it could work was a charlatan, even if they happened to be the most well-published researcher in the history of infectious disease research? It made no sense to me, so I began following both the media and the research. Fortunately, I'm no stranger to reading medical or scientific papers even though I haven't been in a lab (aside from my wife's) in 25 years.

While watching the HCQ story unfold and chatting with friends about it a little, on and off social media, I heard from several people in medicine, research, and public health who reached out to share their first-hand accounts of HCQ's effect on COVID-19 patients. Some of these were former students of mine now working in medicine or research in the United States, India, or other countries. In each case, they witnessed the drug's positive to strongly positive effects on patients. "Nobody here dies," one told me. Through them, I established a few new contacts, and the story was always the same: HCQ works. In combination with my own reading of all the research through late May, I suspected that HCQ would win out as the standard of care for treating COVID-19 patients and that the media and Never Trumpers would have egg on their faces. While I don't bother much with partisan politics, I figured that was a necessary lesson for everyone to learn.

So, on May 22, I read through the abstract of the Surgisphere paper and felt dumbstruck. I stood up and went for a walk, thinking through the two dozen or so research papers I'd read. In a moment of doubt, I wondered: could it really be true that HCQ fell flat, and everything I had been personally told about successful treatment by dozens of doctors and researchers around the world was simply false? That seemed plausible, even in the face of my newly established priors, but a 40% or 50% mortality increase due to application of a historically safe drug seemed to me to be highly unlikely.

I went back to my office and began to dig through the details of the Surgisphere paper in order to better understand.

That evening, my wife and I took our usual post-dinner walk. After a short time, I told her that I didn’t believe the paper could be real. I suspected fraud.

Busy with her own next scientific publication, she had not yet read the blockbuster article, having seen only the media blips declaring "game over" for HCQ. She looked at me skeptically and asked, “Why do you think that?”

My wife is herself a research scientist. She conducts medical and genetics research, has a PhD in biochemistry and degrees/backgrounds in finance, economics, and bioterrorism research. While working primarily on cancer research during the early months of the pandemic, she had not followed the HCQ controversy very closely to this point in time, though we had discussed it a bit during the previous weeks. Appropriately, she put on her skeptic's hat and forced me to answer for my opinion.

Why did I think that?

I skipped past the part she already knew, which was my standing bias based on HCQ research, data, and communications with researchers and doctors in numerous countries. I cannot recall exactly what I told her, and it didn't sound as good coming out of my mouth as it made sense in my head, but my points went something like this, except less precise, without citations, and with some somewhat intelligible mumbling in place of actual numbers:

If the mortality hazard ratios were truly as high as claimed, we would expect many nations to have reported such observations by now. The only published studies with hazard ratios higher than “around 1” compared more severe cases treated with HCQ to less severe cases not treated, so hazard ratios around 1.5 seemed implausible.

The ability to gather data from 671 hospitals on six continents seems like a project for a small army. Health care bureaucracy is notoriously restrictive. Had the FDA or CDC managed to collect that data, I would have assumed some sort of international cooperative effort with scores of coauthors. But four guys with some kind of new database? When did machine learning make the leap to wading through Byzantine health privacy laws over dozens of nations on six continents? Perhaps it's less painful for a bot to remain on hold for an hour to speak with an appropriate hospital administrator to gain permission for data, but it's still an hour.

The claimed proportion of men (53%) hospitalized with COVID-19 was lower than many reports I had read in the press and in research papers. I expected a number near 60% due to all the reports of COVID-19 sex skew among nations with outbreaks through the data endpoint in April (here, here, here, and here).

In the Surgisphere paper, only 16% of cases were treated with HCQ or CQ. This matched neither my mental picture of how widely most nations facing early outbreaks were using it (China, South Korea, Italy, Greece, Belgium, Spain, Switzerland+more, Bahrain, the UAE, Thailand, Indonesia, Finland, Venezuela, Costa Rica, Panama, El Salvador, Malaysia, Singapore, Morocco, Lebanon, Turkey, Paraguay, Algeria, Egypt, Uganda, Serbia, the entire Indian subcontinent where most HCQ is manufactured, and so on), nor the way U.S. hospitals ratcheted up orders of it and American doctors wrote so many prescriptions for it, nor the Sermo survey results from early April indicating that HCQ was by far the most popular option among doctors. Given the exponential growth in COVID-19 cases, the data should be dominated by late March and early April cases, which would align with increased HCQ/CQ use abroad and with the doctors who participated in that survey. I was not, and am still not, sure whether that 16% number is in the ballpark of correct, so this was a tertiary point in my case.

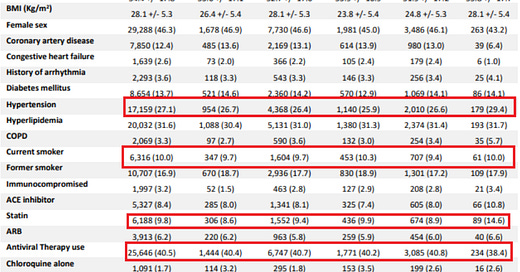

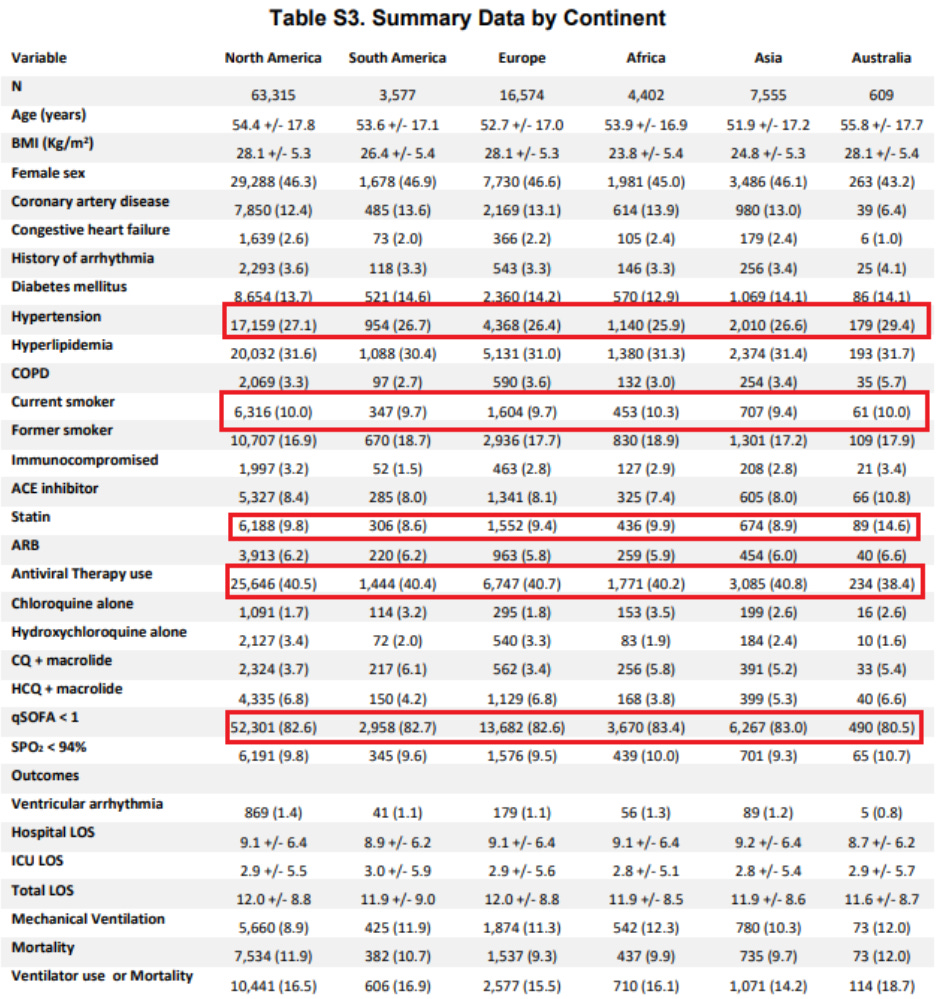

Here is where the Surgisphere data begins to get really weird. The proportions treated across continents just barely varied from that 16% number: North America (15.6%), South America (15.5%), Europe (15.3%), Africa (15%), Asia (15.5%), and Australia (16.2%). That doctors in all nations, experiencing the pandemic differently, would gravitate toward such a highly stable proportion on all continents around the world defies all credulity. Even if some magical fractal of treatment acceptance governed such a statistic, the exponential growth of cases on different timetables makes such uniformity absurdly unlikely. (Table below cut and adjusted in form, presenting original data.)

Zooming out from the numbers in this table into “macrolide vs. no macrolide” categories, the resulting proportions are still similarly uniform, aside from Australia (with macrolide: 10.5%, 10.3%, 10.2%, 9.6%, 10.6%, 12%). Let's try another one: the higher aggregate of HCQ or CQ usage (10.2%, 9.3%, 10.1%, 9.3%, 7.8%, 8.2%). Not quite as low in variance, but still surprisingly uniform for medical treatment data of any kind. These look like the kinds of results one gets from writing code to generate cases at random, written by a coder who didn't know how to generate structural randomness well enough to throw off people with good number sense (or just mediocre number sense and experience with data).
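To put a rough number on "surprisingly uniform," here is a minimal sketch that computes the range and coefficient of variation for the three series of percentages quoted above. The series labels and the `spread` helper are my own naming for illustration, and the assumption that the second and third series follow the same continent order as the first is mine. This only summarizes the quoted percentages; it is not a formal test, which would need the per-continent case counts.

```python
from statistics import mean, pstdev

# Percentages as quoted in the text. The continent order for the second and
# third series is ASSUMED to match the first (NA, SA, Europe, Africa, Asia, Australia).
series = {
    "treated with HCQ/CQ (any arm)": [15.6, 15.5, 15.3, 15.0, 15.5, 16.2],
    "with macrolide": [10.5, 10.3, 10.2, 9.6, 10.6, 12.0],
    "HCQ or CQ aggregate": [10.2, 9.3, 10.1, 9.3, 7.8, 8.2],
}


def spread(values):
    """Return (mean, range, coefficient of variation) for a list of percentages."""
    m = mean(values)
    value_range = max(values) - min(values)
    cv = pstdev(values) / m  # population std dev relative to the mean
    return m, value_range, cv


if __name__ == "__main__":
    for label, values in series.items():
        m, value_range, cv = spread(values)
        print(f"{label}: mean={m:.2f}%, range={value_range:.2f} pts, CV={cv:.1%}")
```

A coefficient of variation of only a few percent across six continents with very different outbreaks and treatment cultures is the kind of tightness the paragraphs above are pointing at.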

Numerous other lines of data included numbers so tightly clustered across continents that they defied all my experience with data of any type I have ever encountered anywhere, including a few thousand scientific publications read or scanned. Some of these are shown later in this article.

The proportional use of CQ seemed far too high in at least some continents. While China chose to use CQ for still unclear reasons (even their published pharmacokinetic research recommended HCQ), most continents aside from possibly Africa use HCQ in far greater quantities than CQ. At the very least, I hadn’t even heard of a nation in either Europe or North America using CQ much at all.

Given the publication of around 20 studies showing positive benefits of HCQ or CQ

Even if we take all the data in the study at face value, absolutely none of it speaks to the Primary HCQ Hypothesis that early treatment of COVID-19 with HCQ in an appropriate cocktail succeeds, nor was there any apparent attempt to disentangle the protocols that could very possibly lead to a Simpson's paradox in the conclusion.
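For readers unfamiliar with Simpson's paradox, the following sketch shows how it can arise when a treatment is given disproportionately to sicker patients. Every number below is hypothetical and invented purely for illustration; none of it comes from the Surgisphere paper or any real study, and the names (`strata`, `death_rate`) are my own.

```python
# HYPOTHETICAL counts, invented for illustration only: (deaths, patients).
strata = {
    "mild": {"treated": (10, 200), "untreated": (80, 1000)},
    "severe": {"treated": (300, 1000), "untreated": (80, 200)},
}


def death_rate(deaths, patients):
    """Fraction of patients who died."""
    return deaths / patients


def aggregate_rate(arm):
    """Death rate for one arm after pooling all severity strata together."""
    deaths = sum(strata[s][arm][0] for s in strata)
    patients = sum(strata[s][arm][1] for s in strata)
    return death_rate(deaths, patients)


if __name__ == "__main__":
    for name, arms in strata.items():
        t = death_rate(*arms["treated"])
        u = death_rate(*arms["untreated"])
        print(f"{name}: treated {t:.1%} vs untreated {u:.1%}")
    print(
        f"pooled: treated {aggregate_rate('treated'):.1%} "
        f"vs untreated {aggregate_rate('untreated'):.1%}"
    )
```

In this made-up example the treated group does better within each severity stratum, yet looks worse once the strata are pooled, simply because most treated patients were severe cases. That is the kind of reversal an analysis would need to rule out by separating protocols and severity.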

Not only did the study look fraudulent on its face, it looked like something one might expect after sending two twenty-year-old pre-med students, hardly yet literate in medical data, to the library for an afternoon with the task of faking research data. Or perhaps from a couple of cardiologists making up data outside of their field? That question is at least worth investigating.

I was so convinced that the data was fraudulent that I began to discuss the paper with several friends and quickly found out that other people had their suspicions and were themselves investigating the Surgisphere corporation's background. Discussion took place rapidly and opposition to the publication organized quickly.

On May 26, French researcher and HCQ advocate Dr. Didier Raoult, the aforementioned most-published infectious disease researcher now often called a "charlatan" despite his tremendous results treating COVID-19 patients, tweeted observations about the strange Surgisphere data. These largely overlapped with my own, but with nice red boxes around several lines with directly apparent low variance that should at least raise eyebrows:

This tweet also indicates a strong likelihood that Dr. Raoult's UHI, which was growing famous for treating thousands of patients with HCQ by the end of May, was inexplicably excluded from the Surgisphere study. Presumably Raoult and his colleagues would have been happy to include their success as part of a mass data gathering project.

Around the same time, statisticians, researchers, and internet sleuths brought a host of additional observations to light. These included:

The number of deaths attributed to Australia was greater than the total number of deaths in the entire continent prior to April 21, a full week after the stated end date of the collection data. This seems particularly unlikely given that Surgisphere claimed to take that data from only five hospitals on the continent. As it turns out, only one hospital on the continent reported more than two deaths at that point.

The hospitals included in the study were not named, which is highly unusual for a research paper that depended on their cooperation and efforts.

In an essay entitled “A Study Out of Thin Air,” published online on May 29, James Todaro, MD (a non-practicing medical school graduate), notes that the North American data in the Surgisphere paper looks highly suspicious. Out of likely around 66,000 COVID-19 hospitalizations by the study inclusion end date of April 14, 63,315 were supposedly in the Surgisphere database. It would be a historically amazing feat of maneuvering bureaucracy, worthy of its own epic book, movie, or perhaps a campfire song, to have wrangled that much data out of those hospitals with highly variable regulations and procedures, nearly all of which are in the U.S. healthcare system.

Adding my own observation upon seeing Todaro's complaint: There are more than 6,000 hospitals in North America, and Surgisphere claimed to compile data from 559 of them. It takes a preposterous suspension of disbelief to accept that 96% of COVID-19 hospitalizations took place at just 9% of the hospitals. Certainly there were concentrations of cases, but not by the more than 200x factor these numbers hilariously suggest.
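The arithmetic behind the 96%, 9%, and 200x figures can be sketched as follows. It uses the round numbers quoted above (about 66,000 hospitalizations and 6,000 hospitals, both approximations), and the variable names are my own. The "concentration factor" here is taken to mean average cases per included hospital divided by average cases per excluded hospital, which is one reasonable reading of the comparison.

```python
# Figures as quoted in the text; the two totals are approximations.
TOTAL_HOSPITALIZATIONS = 66_000   # "likely around 66,000"
SURGISPHERE_CASES = 63_315
TOTAL_HOSPITALS = 6_000           # "more than 6,000"
SURGISPHERE_HOSPITALS = 559

case_share = SURGISPHERE_CASES / TOTAL_HOSPITALIZATIONS
hospital_share = SURGISPHERE_HOSPITALS / TOTAL_HOSPITALS

# Average caseload at included hospitals vs. all remaining hospitals.
cases_per_included = SURGISPHERE_CASES / SURGISPHERE_HOSPITALS
cases_per_excluded = (TOTAL_HOSPITALIZATIONS - SURGISPHERE_CASES) / (
    TOTAL_HOSPITALS - SURGISPHERE_HOSPITALS
)
concentration_factor = cases_per_included / cases_per_excluded

if __name__ == "__main__":
    print(f"share of hospitalizations: {case_share:.1%}")
    print(f"share of hospitals:        {hospital_share:.1%}")
    print(f"concentration factor:      {concentration_factor:.0f}x")
```

Because the true hospital count is higher than 6,000, the real factor implied by the paper's numbers would be somewhat larger than what this sketch prints.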

The data from Africa implied common usage of sophisticated electronic equipment still rare on the continent.

The dosages reported in the study do not even make sense as they do not match known treatment norms.

The reported confidence intervals in the statistical analysis did not seem to match up with the case numbers.

There was no ethics review for the study!

The release of code and data is standard practice in the machine learning and statistics community. The Surgisphere team never released either.

Requests for information about the study went consistently unanswered.

Many of these anomalies and others were included in an open letter to the Surgisphere research team and also editor-in-chief of The Lancet, Richard Horton, signed by scores of scientists and physicians from around the world.

Even worse than the long list of flaws we have discussed already, biopharmaceutical therapeutics developer Dr. Steven Quay pointed out that the study data seemed to indicate that the harm attributed to HCQ/CQ treatment was never disentangled from the harm done by ventilators, which is an unusually obvious variable not to correct for in such a "landmark study".

Additionally, attempts to locate hospitals that participated in the study proved entirely fruitless. Nearly a year later, so far as anyone seems to know or can find with internet searches, not a single hospital in the world has come forward to affirm its participation in the Surgisphere study.

It can be maddening to think that such flimsy work could pass the test of peer review at the world's most prestigious medical journal with a nearly-200-year reputation, that none of the dozens of researchers on the COVID-19 Treatment Guidelines Panel recognized the long list of flaws, that Dr. Anthony Fauci was totally fooled, and that the WHO with all its experts and resources completely bought it without question. And all despite the scores of nations using HCQ to treat patients, without any sense that it caused the claimed increase in mortality---one more suggestion that should have defied belief.

To date, nobody among these authorities is pushing for any investigation into the Surgisphere corporation, much less the suspicious circumstances under which its supposed research changed treatment policy around the world.

Knowing all this, how can anyone ever again trust this conglomeration of medical science gatekeepers and health policy makers?

On June 4, The Lancet retracted the Surgisphere paper, finally saying that they could “no longer vouch for the veracity of the primary data sources.” Their statement suggests that they thought at some point that they could indeed vouch for the data, which would seem surprising. The same day, the NEJM retracted the earlier (unrelated) published Surgisphere study, the only other paper in Surgisphere's publication history.

In a future article, we will explore the history of Surgisphere, its staff, and its relationships with the medical and public health communities. We will then examine potential motivations for what could be a medical crime with a body count that may reach into the millions.

Matthew,

Thank you for the thorough discussion in parts 1 and 2. Has part 3 been published, I would be interested to read if so. Thank you

I am just now delving into this. I Googled "why did Trump mention hydroxychloroquine" and ran across an article in Forbes, a Timeline of Trump's championing of HCQ. In the first paragraph they link to this study, which is stamped as 'retracted' so I came here and searched for 'surgisphere' - So basically two years later and Forbes has not updated or corrected their original article. https://www.forbes.com/sites/andrewsolender/2020/05/22/all-the-times-trump-promoted-hydroxychloroquine/?sh=5f5f9bc34643