Flawed Interpretation of Mask Research Driving Public Conflict and Confusion

The Chloroquine Wars Part XXII

Recently, in a discussion group I participate in (made up of people of generally high intelligence, put together by a friend), somebody pointed out a story from the 2003 SARS outbreak.

From that article:

I will go ahead and say that at the outset of the pandemic, I knew nothing about debates and studies of mask usage in relation to virus spread. My first-order common sense, though, was that they probably work just a little, and maybe the really good ones (N95 or better) work moderately well. When I tried to read up on aerosolization, there was very little information that seemed useful (and I now recommend this Wired article that explains part of that story). So, as I'm sure is true for many people, my opinion on mask usage took shape slowly over several months. My opinion is now informed by several dozen studies, including the relative quality of study designs. In the meantime, I got to see a lot of debate online, some of which reached heated and sometimes mean-spirited proportions.

Back to the discussion thread in question. Following the initial post above, another member of the group responded thusly:

Here is the linked study mentioned in this comment by somebody who likely saw it 10 or almost 11 months ago and felt that it was conclusive. This was about the fiftieth time I've seen this study, as it quickly became one of the most talked about papers in the history of science due to its topical nature, general public anxiety, and abnormal partisanship over anything and everything pandemic related. As such, it is very possibly the study misunderstood by the greatest number of readers in the history of science. At least, it's in the running. In order to understand why, let us examine the study and what it actually measures.

I'm going to stop at this moment and encourage those of you who do not usually read scientific research to read the [primary] paper (the Appendix will be too much for most people not accustomed to technical reading, but the technical minds will want to at least skim that for details). Try to read the study critically to form your own observations and questions. Keep an open mind about data relationships or conclusions. In particular, ask yourself if there are any realistic confounding variables that might be the sources of observed effects during the studied time frames.

Now, let us start with the study title:

Nothing too provocative so far. The title itself does not peddle a sensational conclusion. The third paragraph summarizes the weak state of evidence to date of mask efficacy for other viruses. Nothing too controversial there. However, the next paragraph discusses changing attitudes toward masking from nations around the globe, and different national guidelines during the pandemic, entirely sans data or analysis. This one is a head scratcher. Is that meant to be evidence of anything? What does this paragraph add to the study?

Next, I'd like to focus on the "Limitations" section of the study. A section like this should weigh in any reader's mind as attached to any evidence suggested in the "Conclusion". This section points out that the results of the study represent "the intent-to-treat effects of these mandates" and continues, "that is, their effects as passed and not the individual-level effect of wearing a face mask in public…" This is where we likely see the greatest amount of confusion, both among the public and among many articles and government sources I've seen cite this paper. This study does not purport to show that face masks are effective. In fact, that is clear in the Conclusion section, though readers unfamiliar with the terminology, or unaware that a study could say anything other than "masks work/don't work", may misread it:

The study provides evidence that US states mandating the use of face masks in public had a greater decline in daily COVID-19 growth rates after issuing these mandates compared with states that did not issue mandates.

Emphasis mine.

Now, if you are unfamiliar with intention-to-treat analysis, read the full article behind this link if you have the time to commit. Or, consider the following observations:

Lyu and Wehby are clear about the fact that they are unable to measure adherence rates. And if you read down into the McCoy article (same last link) on intention-to-treat analysis, the author points out that adherence to treatment historically confers additional unexplained benefits beyond either clinical or placebo effects. So far as I can tell after multiple readings, Lyu and Wehby make no mention at all of this effect, and perform no correction for it. Perhaps there is a level of conscientiousness among those who "take their medicine" that results in additional behavioral changes. People who take the step to "treat" an ailment often take additional steps which themselves contribute to the "truth" of the outcome. For instance, it is understood that Tamiflu works on only some influenza strains, but not others. Somebody who takes Tamiflu on day 1 of flu symptoms may also take multivitamins and eat chicken soup, one or the other of which might shorten flu duration (here, here, here, and here). Did somebody who took Tamiflu and had a short-duration flu benefit from the Tamiflu? Additional analysis would be required to separate all the variables. Could people in counties with mask mandates take more vitamin supplements, otherwise change their diets, add UV light to their HVAC systems, or take other precautions? The Lyu and Wehby study does not go far enough to make conclusions on that front. These effects may subsume the entire effect size of the study, meaning that nothing in this study necessarily measures the effects of masks at all.

Is the psychology behind the intention-to-treat enough to affect self-reporting of mild symptoms? Mild COVID-19 cases might go ignored and unreported, particularly in people who aren't old or frail. The Lyu and Wehby study was undertaken during a period in which Americans were learning this and were perhaps beginning to act on knowledge of the relative risks of the disease. It is very possible that counties with mask mandates correlate with changing attitudes toward the relative risks of the crisis in a way that changed their time to reporting of symptoms (or whether they sought clinical diagnosis at all).

A foundational assumption in an intention-to-treat analysis is that the study "analyzes the patients according to the groups to which they were originally assigned." Clearly Lyu and Wehby were unable to control the migration patterns of the American populace. This objection is not simply theoretical. I doubt that the U.S. saw this much short-term or long-term migration at any other point during my life. But let's not rely on my intuition when there are scores of articles discussing and confirming that observation. Most specifically, Americans fled cities in blue counties where there were harsher public policy mandates into red counties where there were less harsh mandates. Perhaps a reviewer should have pointed out that this entire study fails the basic requirement of an intention-to-treat analysis. The excuse, of course, will be that this paper was fast-tracked to publication. Because...that's always a good excuse for ignoring the basics. A more interesting analysis might be to study whether those hundreds of thousands of New Yorkers who fled the Big Apple seeded viral outbreaks in counties that didn't mandate masks because they otherwise had no prior cases. That some of those moves were not permanent does not change the important aspect of all that moving around. As such, this paper fails to adhere to the most basic standards of its study type.

The placebo effect is a tricky thing. The psychological (psychobiological) effects are sometimes measured, but certainly not fully understood. The observed effects have changed over time in ways that keep researchers baffled, often making it hard to separate "true effects" from placebo effects. There is little way to account for potential divergence of psychological and other placebo effects in a study such as this one.

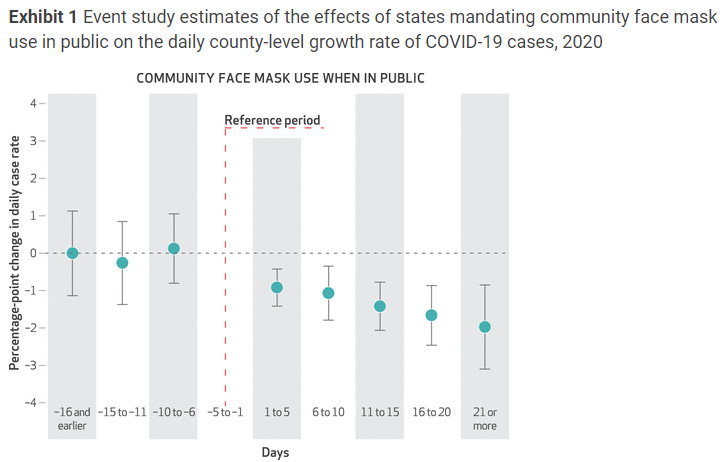

Next, let us consider the basic logic behind the claim made in this study. The study claims to show that mask mandates resulted in lower rates of change of COVID-19 cases, and in particular that around half of the effect occurs during the first five days.

The problem here is that COVID-19 is the disease state that results from viral infection, which is itself downstream from the process of wearing masks, which is itself downstream from mandating mask wearing. This is for a virus with a five day incubation period that sometimes leads to symptoms (the COVID-19 disease state that is this paper's focus) around 8 to 15 days later! And this observation leads to additional questions:

How quickly do mask mandates change adherence levels? How soon after symptoms begin do patients undergo a procedure that could diagnose COVID-19? Given the already enormous lag in exposure-to-symptoms, this paper would need to measure other such steps in causality to even measure anything meaningful.

Why would the paper's reviewers, who should certainly be well versed in the "Niche 101" lessons I've brought up in this article, not suggest holding out for longer data periods, or suggest a secondary baseline measurement period starting at a lag from the mandate that represents the minimal sum of all the aforementioned delays in observation of effects?
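To make the idea of a lagged baseline concrete, here is a minimal sketch of summing the delays between a mandate and the first date on which any effect could show up in reported cases. Only the five-day incubation figure comes from the discussion above. The other delays, the mandate date, and the names `ASSUMED_LAGS_DAYS` and `earliest_observable_date` are hypothetical placeholders chosen for illustration, not values taken from the Lyu and Wehby paper.

```python
from datetime import date, timedelta

# Every delay here is an illustrative assumption except the incubation period,
# which is the five-day figure quoted in the text above.
ASSUMED_LAGS_DAYS = {
    "mandate_to_adherence": 0,    # hypothetical best case: instant compliance
    "infection_to_symptoms": 5,   # incubation period cited in the article
    "symptoms_to_test": 2,        # hypothetical
    "test_to_reported_case": 2,   # hypothetical
}


def earliest_observable_date(mandate_date, lags=ASSUMED_LAGS_DAYS):
    """Return the first date on which a mandate could plausibly affect reported cases."""
    return mandate_date + timedelta(days=sum(lags.values()))


mandate = date(2020, 4, 15)  # hypothetical mandate date
print("Total assumed lag (days):", sum(ASSUMED_LAGS_DAYS.values()))
print("Earliest observable effect:", earliest_observable_date(mandate))
```

Even under these generous assumptions the lag is longer than five days, and any realistic delay in adherence or testing only pushes the date later.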

Really, I could go on for a few dozen more paragraphs, but suffice it to say that this paper does not strike me as meaningful on a basic level of logic, though that's all wrapped in a scientific veneer that should make reasonable people step back and wonder what happened to the institutions that most represent science. While this is another few articles waiting to be written, I think the answer is quite clear: they've been corrupted by money more deeply than at any previous moment in history.

With basic logic out of the way, I'd now like to strip away the scientific veneer and put into plain words what this paper measures so that it becomes clear that this analysis did not go the right way about performing its task. This study measures the rate of change of the rate of change of COVID-19 cases with respect to the variable of mask mandates. To put it another way, this paper measures the second derivative of that function (and mostly during a time period when the variable's effect should not yet be seen).

So, what kind of function are we talking about?

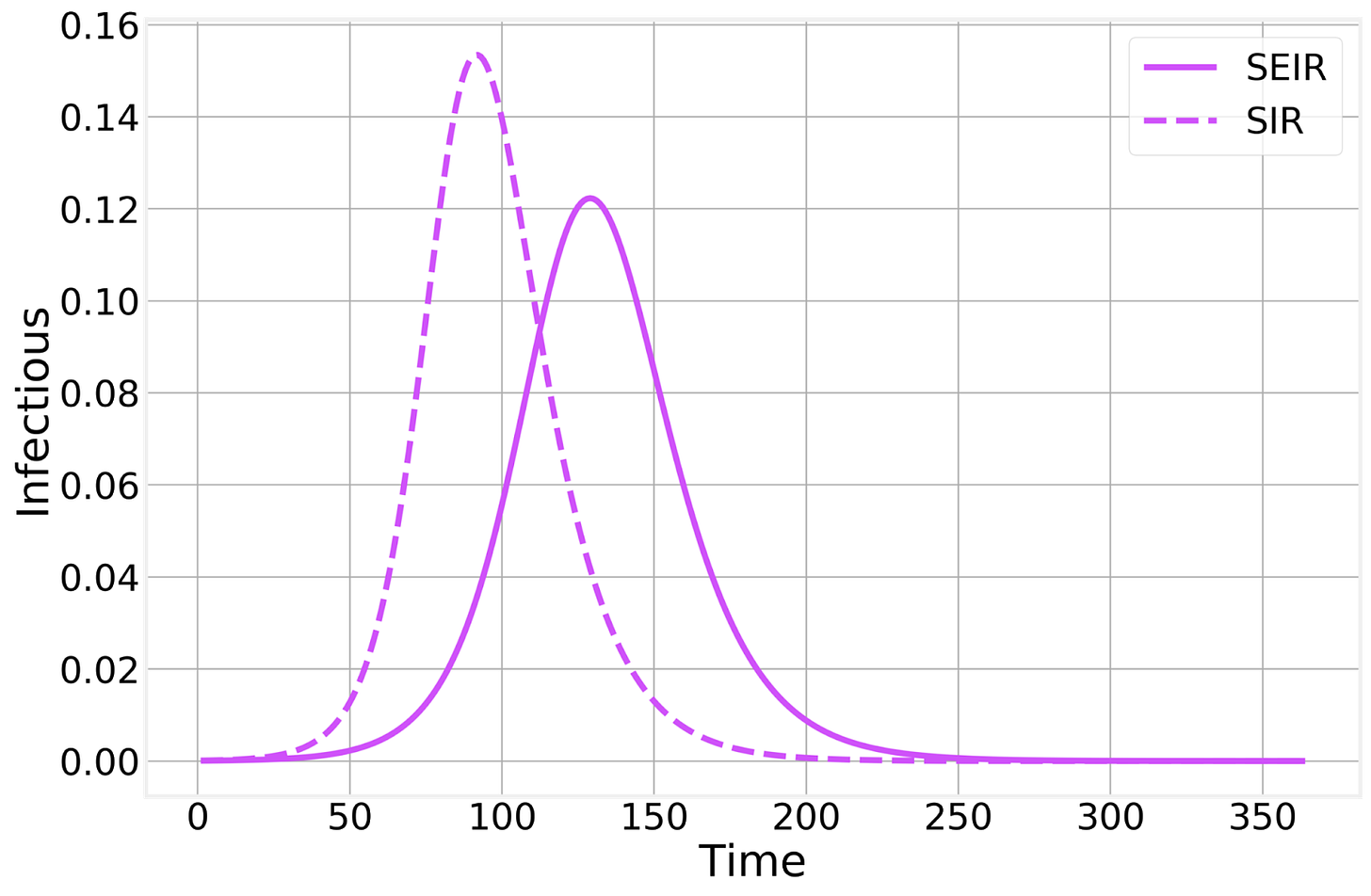

The basic epidemiological model here is the SIRS model.
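For readers who want to see where the familiar curve comes from, here is a minimal sketch of a compartment model. It assumes the plain SIR form (it leaves out the return from recovered to susceptible that SIRS adds), integrates it with simple Euler steps, and uses made-up parameter values. The function name `simulate_sir` and every default argument are illustrative assumptions, not fitted to any real outbreak.

```python
def simulate_sir(beta=0.3, gamma=0.1, population=1_000_000,
                 initial_infected=10, days=300, dt=0.1):
    """Integrate a plain SIR model with forward-Euler steps.

    beta is the assumed transmission rate per day and gamma the assumed
    recovery rate per day. Returns the infected count at the end of each day.
    """
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    steps_per_day = round(1 / dt)
    infected_by_day = [i]
    for _day in range(days):
        for _step in range(steps_per_day):
            new_infections = beta * s * i / population * dt
            new_recoveries = gamma * i * dt
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        infected_by_day.append(i)
    return infected_by_day


infected = simulate_sir()
peak_day = max(range(len(infected)), key=infected.__getitem__)
print(f"Infected count peaks on day {peak_day}")
print(f"Infected at peak: {infected[peak_day]:,.0f}")
print(f"Infected on final day: {infected[-1]:,.0f}")
```

The output rises, peaks, and falls back toward zero on its own, with no intervention in the model at all.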

While technically, the math and modeling can get quite deep, including examination of population graph connectivity, stochastic (random) "superspreaders", decaying fractal patterns, and whatever other real-world toppings you want to pile onto that banana split, the primary epidemiological function has an image (output) that you've seen many times before. The SEIRS model adds an "exposed" population. These are not quite traditional bell curves (symmetric normal distributions) or sinusoidal waves, but they share some physical features with each:

You might compare these idealized curves (shapes) with any real world data such as COVID-19 cases (Worldometer) during two waves of infection/disease in Greece.

Now, here is the defining question that tells whether or not you're ready to read a paper such as this one and understand the data modeling well enough to judge/follow the science:

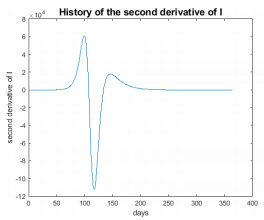

What happens to the second derivative of the function/output during the rise and fall of a typical case curve?

Take a moment on this. Then take a moment to ask whether or not the authors give any indication of this exploration in the paper or its Appendix (if you read that far...I know, it's ten times as long as the paper).

Here is a succinct description of the first derivative of an ideal (per wave) epidemiological curve/function: It starts at zero, gets big quickly, hits zero at the curve peak, then goes negative fairly quickly, then flattens out at zero at the end of the epidemic.

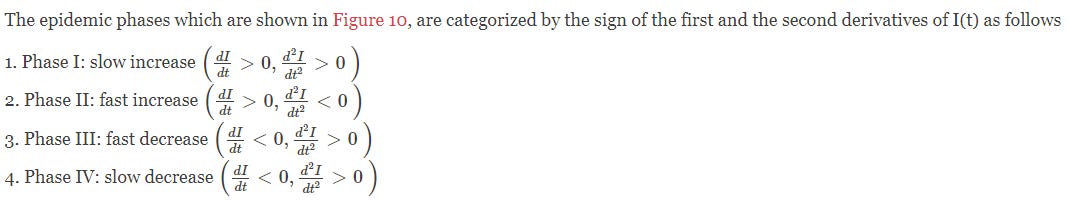

Here is a succinct explanation as to what happens to the second derivative:

(Pre-epidemic): The second derivative begins flat.

(Epidemic Start): The second derivative quickly gets large (positive).

(Middle-ish of Rising Curve): The second derivative passes back through zero and turns negative.

(Peak Epidemic): The second derivative has a large, negative magnitude.

(Epidemic Winding Down): The second derivative passes back through zero and turns positive.

(Post-epidemic): The second derivative has gone from positive back to flat again.
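The sign changes listed above can be checked numerically. The sketch below assumes an idealized, symmetric bell-shaped wave (a Gaussian) as a stand-in for a real case curve, and estimates both derivatives with central finite differences. The peak day, width, checkpoint days, and helper names are all arbitrary choices for illustration.

```python
import math


def bell_curve(t, peak_day=50.0, width=10.0):
    """Idealized single-wave case curve: a Gaussian with peak height 1."""
    return math.exp(-((t - peak_day) ** 2) / (2 * width ** 2))


def first_derivative(f, t, h=0.01):
    """Central-difference estimate of the slope of f at t."""
    return (f(t + h) - f(t - h)) / (2 * h)


def second_derivative(f, t, h=0.01):
    """Central-difference estimate of the curvature of f at t."""
    return (f(t + h) - 2 * f(t) + f(t - h)) / (h * h)


def describe_sign(x, tol=1e-6):
    """Label a value as zero (within tol), positive, or negative."""
    if abs(x) < tol:
        return "zero"
    return "positive" if x > 0 else "negative"


# Checkpoint days chosen to line up with the phases described above,
# for a wave that peaks on day 50 with a width of 10 days.
checkpoints = [
    ("pre-epidemic", -50),
    ("epidemic start", 30),
    ("middle-ish of rising curve", 40),
    ("peak epidemic", 50),
    ("middle-ish of falling curve", 60),
    ("epidemic winding down", 70),
    ("post-epidemic", 150),
]

for label, day in checkpoints:
    slope = describe_sign(first_derivative(bell_curve, day))
    curvature = describe_sign(second_derivative(bell_curve, day))
    print(f"{label:28s} first: {slope:9s} second: {curvature}")
```

For this idealized shape, the second derivative changes sign at the two inflection points, one standard deviation on either side of the peak. A real case curve is noisier and not symmetric, so the checkpoints would not fall so neatly.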

This paper verifies my observations/claims in different words:

Note that the only time the second derivative is negative (Phase II above) is around the peak of an epidemic. Or we could say that the second derivative is "less positive" at the tails (start or end of a "wave"). Here is an example graph:

At this point, my primary question becomes: Why would the outcome measured in the Lyu/Wehby study be perceived as associated with COVID-19 outcomes?

On its face, it should not be. At best it tells us about how counties that mandated masks cluster differently on epidemiological curves. And given that heavy population centers were hit by waves earlier, and those skewed heavily toward the mask mandate pool, we all would have guessed that, anyway.

An interesting attempt at generating value might have been to see whether or not mask mandates successfully flattened the curve. That would require matching the dates of mask mandates with relatively depressed magnitudes (of positive or negative second derivatives) in the epidemiological curves that follow, after taking into account the sum of all the time lags mentioned earlier.

But that's not what happened.

At best, this is poor modeling, or else it's a bogus representation of the model.

At worst, the authors are entirely aware that positivity/negativity of the second derivative of an epidemiological curve tells us where we are on the curve more than anything. That would mean that they designed propaganda, not science.

And this certainly should not be one of the most widely read scientific papers in history---a paper pushed hard by the media and by "pandemic partisans" on social media. It was fast-tracked garbage, delivered with something that looks like a modified Angus Beef stamp (found in the Appendix). And that the CDC cites it as part of its evidence pool suggesting that masks do in fact work should make every American question whether there are competent people at the CDC, or whether it's all just political. I have my own suspicions.

Does showing all the virus on the outside of the mask represent a projected bias? Hmmmm.

Hi Mathew,

Thanks for all your great work!

Here is a link to the pdf version of the article, for those who want to browse/review it per your suggestion but not pay for it:

https://www.wichita.gov/Coronavirus/COVID19Docs/Community%20Use%20of%20Face%20Masks%206.16.2020.pdf

I could not find the appendix, however.

Request to other readers:

Honestly, I am confused about masks...even after digging into the topic somewhat.

Anti-mask arguments (which, after my research, I find somewhat more compelling than the pro-mask arguments, like the one that Mathew just reviewed in this blog post) seem to go as follows:

SARS-CoV-2 is mostly spread by aerosols and not droplets. Therefore, regular cloth masks are useless, as they mostly prevent spread by droplets and are not effective in blocking aerosols.

However, another argument against masks is that they are dangerous because of the build-up of carbon dioxide.

Aren't these two arguments contradictory? Anyone who is well versed on this topic, please respond. Also, other resources (articles, videos, etc) that will help shed some light are welcome.

Thank you!